In this article, I will describe a method to streamline guest posting and share a piece of code I’ve written to improve the process. It allowed me to generate a very targeted list of around 100 guest post opportunities in a few hours. I hope you enjoy it.

If you’re not familiar with guest posting, it means publishing content on sites that accept articles from guest authors, people who aren’t part of their team. The site gets quality content in exchange for some kind of promotion of the author’s own website or products.

By guest posting, you can reach a specific audience (the people reading the site you’re writing for) and gain some visibility. In SEO, we go after links in the content: these backlinks help increase visibility in the organic search results.

A few months ago I stumbled upon a series of video tutorials detailing how to streamline finding and sorting out guest post opportunities using Google Sheets.

It’s very accessible, easy to understand and powerful. I strongly recommend you check it out if you’re interested in guest posting:

https://www.facebook.com/pg/theseoproject/videos/

The degree of automation explained in this tutorial is really impressive and the heavy use of Google Sheets is right up my alley.

So anyway, I tested it out and loved it. It allows you to rank opportunities by relevancy and traffic to generate both backlinks and targeted traffic. You can determine priorities to maximise the efficiency of your guest posting campaigns.

Improving the process

At the end of the tutorial series, there’s a step where a virtual assistant (VA) is needed to collect the number of pages Google has indexed for specific advanced search queries.

I have nothing against using VAs, but I’m biased when it comes to very repetitive, low-value tasks: I think the human brain, no matter its hourly rate, should be used for what it’s uniquely suited to, and the rest should be automated.

In addition, introducing a VA complicates a pretty straightforward process: all of a sudden you need to manage people and spend money and time on recruiting and training. You also risk miscommunication or other unforeseen complications.

This is why I thought this could be a good opportunity to work on a very small scraping project in Python.
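To make that task concrete, here is a minimal Python 3 sketch of the two pieces such a scraper needs: building a search URL for an advanced query and extracting the indexed-page count from Google’s result-stats line. It is not the original RGA.py code. The `site:` plus quoted-footprint query, the function names, and the “About N results” wording are all assumptions; Google’s markup and phrasing change over time and vary by locale.

```python
import re
from urllib.parse import urlencode

# Assumed wording of Google's result-stats line, e.g. "About 1,230 results (0.42 seconds)".
RESULTS_RE = re.compile(r"([\d,.\s]+)\s+results?\b")


def build_search_url(domain, footprint):
    """Build a Google search URL for a hypothetical site-restricted advanced query."""
    query = f'site:{domain} "{footprint}"'
    return "https://www.google.com/search?" + urlencode({"q": query})


def parse_result_count(stats_text):
    """Return the indexed-page count found in a result-stats string, or 0 if none."""
    match = RESULTS_RE.search(stats_text)
    if not match:
        return 0
    # Drop thousands separators (commas, dots, spaces) before converting.
    digits = re.sub(r"\D", "", match.group(1))
    return int(digits) if digits else 0
```

The parser deliberately returns 0 when no count is found, so a missing stats line shows up as “no indexed pages” rather than crashing the run.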

Usage

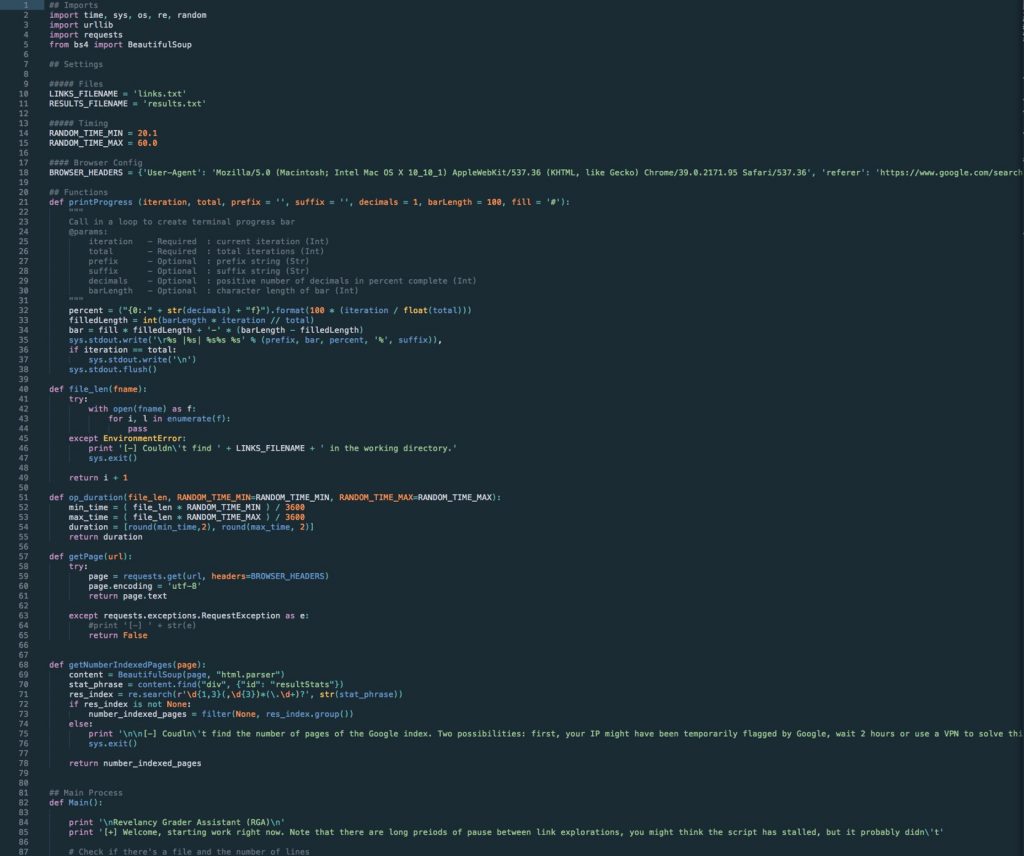

I wanted it to be very simple to use. Assuming you have everything you need to run a Python 2.7 script on your computer, plus the required packages (requests and BeautifulSoup4), here’s how to use it:

- create a links.txt file and paste into it all the links from one column of your Google Sheet

- execute RGA.py (RGA stands for Relevancy Grader Assistant, as the script helps with the relevancy grading phase)

- once it’s done, copy and paste the content of results.txt into the spreadsheet and let it work out the score

Easy, and nobody has to lose their mind over copy-and-paste work.
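The round trip from links.txt to results.txt can be sketched like this. It is a hypothetical outline in Python 3, not the actual script: `count_pages` stands in for whatever lookup does the scraping, and blank input lines are kept as blank results so the output still lines up row by row when pasted back into the sheet.

```python
from pathlib import Path


def grade_links(links_path, results_path, count_pages):
    """Look up every link in links_path and write one result per line to results_path.

    count_pages is a hypothetical callable (link -> number). Blank input lines
    produce blank output lines so rows stay aligned with the spreadsheet.
    """
    links = [line.strip() for line in Path(links_path).read_text().splitlines()]
    results = [str(count_pages(link)) if link else "" for link in links]
    Path(results_path).write_text("\n".join(results) + "\n")
    return results
```

For example, `grade_links("links.txt", "results.txt", my_lookup)`, where `my_lookup` is your own (hypothetical) scraping function.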

The script

The full script is available on GitHub right here.

Lessons learnt

It’s quite obvious, but the first lesson is to make your script behave like a real user, basically a human using Google. Every little bit helps:

- use a real User-Agent header from a popular web browser

- if you can, actually click buttons rather than requesting weird, complicated URLs directly that nobody would ever type into their browser

- randomise delays: humans aren’t as consistent as robots, so introduce a bit of variability
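Randomised delays take only a few lines. In the Python 3 sketch below, the function names and the default 20 to 35 second window are illustrative assumptions; `sleep` is injectable so the loop can be tested without actually waiting.

```python
import random
import time


def human_delay(base=20.0, jitter=15.0):
    """Return a pause length in seconds: `base` plus a random extra of up to `jitter`."""
    return base + random.uniform(0, jitter)


def polite_loop(items, handle, sleep=time.sleep, **delay_kwargs):
    """Call handle(item) for each item, sleeping a random amount between calls."""
    results = []
    for i, item in enumerate(items):
        if i:  # no wait needed before the very first request
            sleep(human_delay(**delay_kwargs))
        results.append(handle(item))
    return results
```

In practice, `handle` would send the request with a realistic User-Agent header, e.g. `requests.get(url, headers={"User-Agent": ua}, timeout=30)`.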

The real lesson was that you can take your time with this type of operation. Your computer works for you, freeing you up for the rest of the tasks. For a guest post campaign, for instance, you can work on your outreach sequence, content planning, etc. while the script finishes collecting data.

Basically, it’s better to be slow but fully hands-off than fast: you might collect a lot of data in very little time, but you’d have to check every 10 minutes whether you got busted and need to change your IP address.
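One way to stay hands-off when you do get busted is to detect it and back off instead of stopping. The Python 3 sketch below assumes a throttled request shows up as HTTP 429 or a redirect to Google’s `/sorry/` CAPTCHA page, a common heuristic rather than a guarantee. `fetch` is a hypothetical function returning `(status_code, final_url, body)`, and the 10-minute starting wait is arbitrary.

```python
import time


def looks_flagged(status_code, final_url):
    """Heuristic (assumed): throttled clients get HTTP 429 and/or a /sorry/ CAPTCHA page."""
    return status_code == 429 or "/sorry/" in final_url


def backoff_schedule(first=600, factor=2, cap=4 * 3600):
    """Yield waits in seconds that grow by `factor` after each consecutive flag, up to `cap`."""
    wait = first
    while True:
        yield wait
        wait = min(wait * factor, cap)


def fetch_patiently(fetch, url, max_retries=5, sleep=time.sleep):
    """Call fetch(url); when flagged, wait and retry. Return the body, or None if never unflagged."""
    waits = backoff_schedule()
    for attempt in range(max_retries + 1):
        status, final_url, body = fetch(url)
        if not looks_flagged(status, final_url):
            return body
        if attempt < max_retries:  # don't sleep after the last failed attempt
            sleep(next(waits))
    return None
```

The trade-off is the one described above: a flagged run simply slows down for a while instead of needing someone to check on it.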

Script Update, March 2017

Waiting 8 hours for the job to finish can be a bit frustrating, especially if your IP gets flagged during the night and the script stops. Switching between IPs using VPNs is also not the cleanest way to operate: it works, but it’s not the best user experience I can provide.

So I’ve added proxy support to the script. Simply add a “proxies.txt” file to the working directory, and the script will create one thread per proxy and route that thread’s requests through it.

It allowed me to experiment with multithreading in Python, which was fun. If you’re curious about it like I was, you can have a look here: https://www.tutorialspoint.com/python/python_multithreading.htm
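Here is a minimal Python 3 sketch of the one-thread-per-proxy idea using the standard `threading` and `queue` modules. It illustrates how such a design can look and is not the script’s actual code: every worker pulls links from a shared queue, so faster proxies simply handle more links, and results are stored by index to preserve the input order. `fetch(link, proxy)` is hypothetical, and falling back to a single direct-connection thread when proxies.txt is missing is also an assumption.

```python
import queue
import threading
from pathlib import Path


def load_proxies(path="proxies.txt"):
    """Return one proxy URL per non-empty line, or [] if the file doesn't exist."""
    p = Path(path)
    if not p.exists():
        return []
    return [line.strip() for line in p.read_text().splitlines() if line.strip()]


def run_with_proxies(links, proxies, fetch):
    """Run fetch(link, proxy) over all links with one worker thread per proxy."""
    jobs = queue.Queue()
    for index, link in enumerate(links):
        jobs.put((index, link))
    results = [None] * len(links)

    def worker(proxy):
        while True:
            try:
                index, link = jobs.get_nowait()
            except queue.Empty:
                return  # nothing left to do: let this thread finish
            results[index] = fetch(link, proxy)  # each index is written by one thread only

    # No proxies: fall back to a single thread using a direct connection (proxy=None).
    threads = [threading.Thread(target=worker, args=(proxy,)) for proxy in (proxies or [None])]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```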

As for proxies, I thought they would be much more complicated to handle than they turned out to be. I’m still using the Requests package, and it handles proxies very well. Check out this page for more info: http://docs.python-requests.org/en/master/user/advanced/
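With Requests, routing a call through a proxy only takes a `proxies` mapping from URL scheme to proxy URL. A small Python 3 helper, assuming the same proxy is used for both HTTP and HTTPS (the helper names are illustrative):

```python
def requests_proxies(proxy_url):
    """Build the `proxies` mapping Requests expects; None means a direct connection."""
    if not proxy_url:
        return None
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, proxy_url, headers=None, timeout=30):
    """Fetch `url` through `proxy_url` with Requests and return the Response."""
    import requests  # imported here so requests_proxies() works even without Requests installed

    return requests.get(url, headers=headers, proxies=requests_proxies(proxy_url), timeout=timeout)
```

Authenticated proxies can usually be written as `http://user:password@host:port` in the same mapping.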

Fun additional feature: I’m also using the Fake Useragent package to add some variety to the browsers I emulate. The package has neat methods like “.random” that pick a browser at random based on worldwide usage. Pretty impressive, although I ended up randomising between the main desktop browsers: I suspect nobody runs complex advanced queries from their smartphone.
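Restricting the rotation to desktop browsers can also be sketched without any dependency, by picking from a short hard-coded list. This Python 3 sketch swaps Fake Useragent for a fixed list purely for illustration; the strings are example desktop user agents that will age, so treat them as placeholders.

```python
import random

# Example desktop User-Agent strings (placeholders; refresh them periodically).
DESKTOP_USER_AGENTS = [
    # Chrome on Windows
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    # Firefox on Windows
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) Gecko/20100101 Firefox/121.0",
    # Safari on macOS
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.2 Safari/605.1.15",
    # Edge on Windows
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36 Edg/120.0.0.0",
]


def random_desktop_headers(rng=random):
    """Return request headers with a User-Agent picked at random from the desktop list."""
    return {"User-Agent": rng.choice(DESKTOP_USER_AGENTS)}
```

Arguably, picking one user agent per proxy thread rather than per request looks more like a single real person, since a human doesn’t switch browsers between searches.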

Now, it obviously sounds like I had great fun experimenting with all of this… But I have to say the results are less reliable than they used to be, hence the updated “Future improvements” section below. I seem to get flagged more often: each thread (so each proxy IP) gets flagged much more quickly than when I was routing everything through a VPN.

Future improvements

I need to improve two things in the current version of the script:

- First, I need to find out how to make IPs get flagged less often. I need to investigate whether the problem comes from the proxy IPs themselves (by testing dedicated vs. semi-dedicated ones). There’s also the possibility that, when using a big VPN provider like PIA, my advanced search queries represent only a small fraction of the total query volume and are therefore much more discreet than with dedicated proxies…

- Second, a more flexible way to write results. A CSV file would make more sense, but first I need to figure out whether the import would work correctly with Josh’s Google Sheets system for grading opportunities.
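Writing the results as CSV is straightforward with the standard `csv` module. In this Python 3 sketch the column names are hypothetical, and whether the file imports cleanly into the grading sheet remains the open question above.

```python
import csv


def write_results_csv(rows, out):
    """Write (link, indexed_pages) rows as CSV, preceded by a header row.

    `out` is any text file object; for real files, open them with newline=""
    as the csv module documentation recommends.
    """
    writer = csv.writer(out)
    writer.writerow(["link", "indexed_pages"])
    writer.writerows(rows)
```

For example: `with open("results.csv", "w", newline="") as f: write_results_csv(rows, f)`. Links containing commas are quoted automatically, which a plain text file can’t express.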

Conclusion

So here you have it: a way to automate most of the tasks in the guest post strategy from this video series. I hope it helps some of you; it sure was a fun Saturday project.